Your load balancer is less than 8% utilized.

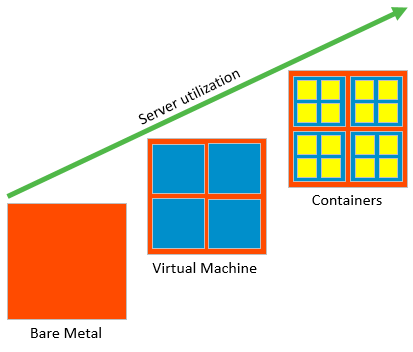

Server infrastructure has made great strides in efficiency over the past few years. Apps have moved from bare metal servers to virtual machines to containers, raising utilization from under 10% to over 75%. Each step shrinks the footprint, enabling higher application density and higher resource utilization. This kind of infrastructure optimization is what we mean by application server fleet efficiency.

As efficiency increases, further improvement becomes harder to achieve. Inevitably, CIOs will look to adjacent technologies to deliver similar improvements.

Application Proxy Services

First, let's define application services. The term broadly covers any network technology that interacts directly with applications, such as DNS, proxies, and load balancers.

Load balancers have largely been left out of this evolution and are ripe for massive improvements in efficiency. Because they are the network infrastructure closest to applications and servers, many CIOs are asking why they are paying for so much idle hardware.

Below are a few examples of the two primary ways your load balancer can help extend your fleet efficiency.

Force Multiplier

A modern load balancer such as Avi Vantage can be a force multiplier, increasing the efficiency that can be achieved across a server farm. Common mechanisms include HTTP caching and DDoS mitigation, both of which take load off the servers. More important, though, is the ability to participate directly in decisions about application deployment and scale.

The migration from bare metal to virtual machines to containers has enabled smaller, more granular servers. Combined with orchestrators such as vCenter, AWS, Marathon/Mesos, or OpenStack, this lets apps be spun up or down quickly. However, orchestrators sit out of band and have no visibility into the success, failure, or user experience of an application. In other words, they know how to spin servers up or down, but not when.

Rightsizing Apps

By combining visibility and analytics with a full-proxy load balancer, Avi Vantage learns the health of the application. This lets it give feedback to fleet orchestrators, instructing them to start spinning VMs or containers up or down. For instance, if a server takes 500 ms or more to generate content and its CPU stays above 80% for 5 minutes, Vantage can tell Docker to spin up another instance of the app.
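The example rule combines two thresholds that must hold for a sustained period. The Python below is a minimal sketch of such a trigger. Several details are assumptions for illustration and do not come from Vantage's implementation: the class name `ScaleOutTrigger`, the sample format, and the reading that *every* sample in the 5-minute window must breach both thresholds. The actual orchestrator call, such as asking Docker for another instance, is left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sample:
    t: float           # timestamp in seconds
    latency_ms: float  # time the server took to generate content
    cpu_pct: float     # server CPU utilization, 0-100


class ScaleOutTrigger:
    """Hypothetical trigger: fires once latency >= 500 ms AND CPU > 80%
    have both held continuously for the whole window (default 5 minutes)."""

    def __init__(self, latency_ms: float = 500.0, cpu_pct: float = 80.0,
                 window_s: float = 300.0):
        self.latency_ms = latency_ms
        self.cpu_pct = cpu_pct
        self.window_s = window_s
        self.breach_start: Optional[float] = None  # start of the current breach

    def observe(self, s: Sample) -> bool:
        breached = s.latency_ms >= self.latency_ms and s.cpu_pct > self.cpu_pct
        if not breached:
            # Any healthy sample resets the clock.
            self.breach_start = None
            return False
        if self.breach_start is None:
            self.breach_start = s.t
        # Scale out only after the breach has lasted the full window.
        return s.t - self.breach_start >= self.window_s


trigger = ScaleOutTrigger()
for t in range(0, 301, 60):
    if trigger.observe(Sample(t, latency_ms=650, cpu_pct=90)):
        print(f"t={t}s: ask the orchestrator for another instance")
```

As written, the trigger keeps returning `True` for as long as the breach lasts. In practice it would be paired with a limit on how often scaling actions may run.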

Avi Vantage has a number of tools to make this happen:

- Fewest Servers - A load balancing algorithm that distributes traffic across the fewest possible servers without exceeding their capacity limits.

- AutoScale Policy - A template that defines when and how often to spin app server capacity up or down. For instance, scale up at most once in a 5-minute window, based on a set of criteria.

- 500+ metrics & events - Use any combination of this extensive list of criteria as the basis for a scaling action.

- ControlScript - When conditions are met, run a custom script on the Controller. The script can make an API call to an orchestrator, or log into it, to start a server scaling action.
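The Fewest Servers and AutoScale Policy entries can be illustrated with a small sketch. Vantage's actual algorithms are not described here, so two things below are assumptions: the names (`Server`, `fewest_servers_pick`, `CooldownGate`) and the packing rule, which sends each request to the busiest server that still has headroom. Packing load this way leaves lightly used servers idle so they can be scaled in. The cooldown enforces the "at most one scale-up per 5-minute window" example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Server:
    name: str
    capacity: int    # hypothetical per-server limit, e.g. max concurrent connections
    active: int = 0  # current concurrent connections


def fewest_servers_pick(servers: List[Server]) -> Optional[Server]:
    """Pack traffic onto as few servers as possible.

    Chooses the busiest server that is still below capacity (ties go to the
    earliest server in the list). Returns None when every server is full,
    which is the signal to scale out.
    """
    candidates = [s for s in servers if s.active < s.capacity]
    if not candidates:
        return None
    return max(candidates, key=lambda s: s.active)


class CooldownGate:
    """Allow at most one scaling action per cooldown window."""

    def __init__(self, cooldown_s: float = 300.0):
        self.cooldown_s = cooldown_s
        self.last_action: Optional[float] = None

    def try_acquire(self, now: float) -> bool:
        if self.last_action is not None and now - self.last_action < self.cooldown_s:
            return False  # still inside the window: suppress this action
        self.last_action = now
        return True


pool = [Server("a", 2), Server("b", 2), Server("c", 2)]
for _ in range(5):
    chosen = fewest_servers_pick(pool)
    chosen.active += 1
print([(s.name, s.active) for s in pool])  # [('a', 2), ('b', 2), ('c', 1)]
```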

De-provision Apps

In addition to rightsizing app server deployments, a second use case is to apply a policy that simply shuts down apps when they are not in use.

Kamal noted in his presentation that PayPal monitored the usage of containers spun up by developers. Most of these containers were used for no more than an hour, yet on average they were left running for days. A simple solution is to automatically hibernate a container after a defined period of inactivity, such as 24 hours. The same model extends just as easily to VMs.
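Because the load balancer sees every request, it already knows when each app was last used. Below is a minimal sketch of an idle-hibernation policy. It assumes a hypothetical `Workload` record carrying a last-request timestamp and uses the 24-hour limit from the example. Actually stopping the container or VM would require an orchestrator call, which is not shown.

```python
from dataclasses import dataclass
from typing import List

IDLE_LIMIT_S = 24 * 60 * 60  # example policy from the text: 24 hours of inactivity


@dataclass
class Workload:
    name: str
    last_request_at: float  # epoch seconds of the last request seen by the load balancer
    running: bool = True


def select_for_hibernation(workloads: List[Workload], now: float,
                           idle_limit_s: float = IDLE_LIMIT_S) -> List[str]:
    """Names of running workloads that have been idle for at least idle_limit_s."""
    return [w.name for w in workloads
            if w.running and now - w.last_request_at >= idle_limit_s]


now = 1_700_000_000.0
fleet = [
    Workload("dev-a", now - 3_600),                      # used an hour ago
    Workload("dev-b", now - 3 * 86_400),                 # idle for three days
    Workload("dev-c", now - 5 * 86_400, running=False),  # already hibernated
]
print(select_for_hibernation(fleet, now))  # ['dev-b']
```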

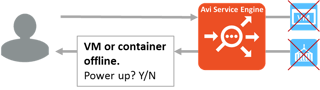

What happens when a user tries to access that application? Even though the server is down, the virtual service (VS) on the load balancer is still listening. The VS can be configured to respond with a generic error page that includes a button to run a script that wakes the virtual machine.

On Avi Vantage, this is done with a combination of a DataScript, which responds with a friendly error page, and a ControlScript, which is triggered by the DataScript and sends a message to the orchestrator to wake the container or VM.
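The virtual service's request handling can be sketched as a single decision function. This is a hypothetical illustration in Python, not DataScript syntax. The `/__wake` path, the page markup, and the `wake_backend` callback are all assumptions; the callback stands in for the ControlScript that calls the orchestrator.

```python
from typing import Callable, Tuple

# Hypothetical "friendly error page" with a button that posts to the wake path.
WAKE_PAGE = (
    "<html><body><p>This application is hibernating.</p>"
    '<form method="POST" action="/__wake"><button>Wake it up</button></form>'
    "</body></html>"
)


def handle_request(method: str, path: str, backend_up: bool,
                   wake_backend: Callable[[], None]) -> Tuple[int, str]:
    """Return (HTTP status, body) for a request hitting the virtual service.

    backend_up:   whether any pool member is currently healthy
    wake_backend: hypothetical hook standing in for the ControlScript
    """
    if backend_up:
        # Normal path: the request would be proxied to the pool.
        return 200, "proxied to backend"
    if method == "POST" and path == "/__wake":
        # The user pressed the button: ask the orchestrator to start the VM/container.
        wake_backend()
        return 202, "Waking up; please retry in a minute."
    # Server is down: answer with the friendly page instead of a raw error.
    return 503, WAKE_PAGE


status, body = handle_request("GET", "/", backend_up=False, wake_backend=lambda: None)
print(status)  # 503
```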

In environments such as AWS, this can dramatically reduce the number of running VMs, translating into significant cost savings.

App Proxy Efficiency

The second way app proxies help extend fleet efficiency is by governing their own utilization. Just as servers have become far more efficient by sharding workloads across smaller containers, network services such as load balancing benefit equally from a parallel, scale-out architecture. And just as VMs and containers rely on orchestrators such as vCenter or Mesos, app proxies require a centralized orchestrator to manage the app service fabric.

With this architecture, application proxies can be 8x more efficient.

Dynamic Scale

Traditional load balancer appliances must be purchased up front, based on guesstimates of traffic requirements five years into the future. If traffic is 5 Gb/s today and could triple in five years, you are looking at 15 Gb/s of capacity. You also need to account for traffic spikes, which could double that figure to 30 Gb/s. So while your current traffic is 5 Gb/s, you have 6x that capacity consuming power and rack space in your data center.
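Written out, the sizing arithmetic is:

```latex
\begin{aligned}
C_{\text{growth}} &= 3 \times 5~\text{Gb/s} = 15~\text{Gb/s} \\
C_{\text{provisioned}} &= 2 \times C_{\text{growth}} = 30~\text{Gb/s} \\
\frac{C_{\text{provisioned}}}{5~\text{Gb/s}} &= 6
\end{aligned}
```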

Avi Vantage scales its capacity up or down on demand. When traffic approaches the capacity of an Avi Vantage proxy, the Avi Vantage controller automatically spins up another proxy, adding the CPU and other resources needed to handle the increased load. The process reverses when traffic drops.
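Below is a minimal sketch of the scale-out/scale-in decision. It assumes each proxy has a fixed, hypothetical capacity and uses two utilization watermarks (80% and 40%, chosen for illustration) so the fleet does not flap between sizes. The function name and thresholds are not Vantage's; they only show the shape of the logic.

```python
import math


def next_proxy_count(traffic_gbps: float, per_proxy_gbps: float, current: int,
                     high: float = 0.8, low: float = 0.4, minimum: int = 1) -> int:
    """Return how many proxies to run for the offered traffic.

    high/low are hypothetical utilization watermarks; the gap between them
    provides hysteresis.
    """
    current = max(current, minimum)
    utilization = traffic_gbps / (current * per_proxy_gbps)
    if utilization > high:
        # Scale out to the smallest count that brings utilization back under `high`.
        return math.ceil(traffic_gbps / (per_proxy_gbps * high))
    if utilization < low and current > minimum:
        # Scale in one proxy at a time, and only if the rest stay under `high`.
        if traffic_gbps / ((current - 1) * per_proxy_gbps) <= high:
            return current - 1
    return current


print(next_proxy_count(9.0, 2.0, 5))  # 6: 9 Gb/s is 90% of 10 Gb/s
print(next_proxy_count(2.0, 2.0, 6))  # 5: 2 Gb/s is about 17% of 12 Gb/s
```

Scaling in one step at a time is a deliberately conservative choice. It avoids removing several proxies at once on a brief traffic dip.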

High Availability

Newer architectures also enable better high availability models. With traditional Active/Standby appliances, half of the purchased capacity sits idle. Extending the numbers from the previous section, this means purchasing 60 Gb/s of capacity for 5 Gb/s of traffic.
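Applying Active/Standby redundancy to the 30 Gb/s sizing gives the utilization figure used in the conclusion:

```latex
\begin{aligned}
C_{\text{HA}} &= 2 \times 30~\text{Gb/s} = 60~\text{Gb/s} \\
U &= \frac{5~\text{Gb/s}}{60~\text{Gb/s}} \approx 8.3\%
\end{aligned}
```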

In Avi Vantage, every proxy is active. The Avi Vantage controller ensures that the fabric always has enough spare capacity to absorb a failure.
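One way to express "enough capacity to absorb a failure" is an N+1 check: the fabric must still carry the full load after losing its largest proxy. Below is a minimal sketch that assumes hypothetical per-proxy capacities. How Vantage actually computes spare capacity is not described here.

```python
import math
from typing import Sequence


def survives_one_failure(load_gbps: float, capacities_gbps: Sequence[float]) -> bool:
    """True if the fabric can lose its largest proxy and still carry load_gbps."""
    if not capacities_gbps:
        return False
    remaining = sum(capacities_gbps) - max(capacities_gbps)
    return load_gbps <= remaining


def proxies_for_n_plus_1(load_gbps: float, per_proxy_gbps: float) -> int:
    """Smallest number of identical, all-active proxies that survives one failure."""
    return math.ceil(load_gbps / per_proxy_gbps) + 1


n = proxies_for_n_plus_1(5.0, 2.0)
print(n, survives_one_failure(5.0, [2.0] * n))  # 4 True
print(survives_one_failure(5.0, [2.0] * 3))     # False
```

With hypothetical 2 Gb/s proxies, 5 Gb/s of traffic needs four all-active proxies (8 Gb/s in total) to tolerate a single failure. None of that capacity sits idle on standby.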

Conclusion

In the sample math above, a traditional load balancer operates at roughly 8% utilization (5 Gb/s of traffic on 60 Gb/s of capacity), which is slightly higher than the industry average. So what is your efficiency?